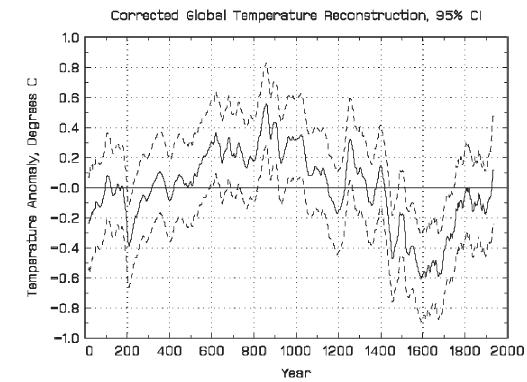

I have posted many times on the numerous problems with the historic temperature reconstructions that were used in Mann’s now-famous "hockey stick." I don’t have any problem with scientists trying to recreate history from fragmentary evidence, but I do have a problem when they overestimate the certainty of their findings or enter the analysis trying to reach a particular outcome. Just as an archaeologist must admit there is only so much that can be inferred from a single Roman coin found in the dirt, we must accept that there are limits to how good trees are as thermometers. The problem with tree rings (the primary source for Mann’s hockey stick) is that they vary in width for any number of reasons, only one of which is temperature.

One of the issues scientists are facing with tree ring analyses is called "divergence." Basically, when scientists measure tree rings, they have "data" in the form of rings and ring widths going back as much as 1,000 years (if they pick the right tree!). This data must be scaled: a ring width variation of 0.02 mm must be translated in some way into a temperature variation. What scientists do is take the last few decades of tree rings, for which we have simultaneous surface temperature recordings, and scale the two data sets against each other. Then they can use this scale when going backwards to convert ring widths to temperatures.
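
To make the scaling step concrete, here is a minimal sketch, assuming the simplest possible approach: a straight-line least-squares fit of temperature against ring width over the overlap period. The numbers are invented purely for illustration and do not come from any real tree ring series, the function names are hypothetical, and actual reconstructions use considerably more elaborate regressions.

```python
from statistics import mean

def fit_linear_scale(ring_widths_mm, temperatures_c):
    """Least-squares fit of: temperature = slope * width + intercept."""
    w_bar = mean(ring_widths_mm)
    t_bar = mean(temperatures_c)
    sxx = sum((w - w_bar) ** 2 for w in ring_widths_mm)
    sxy = sum((w - w_bar) * (t - t_bar)
              for w, t in zip(ring_widths_mm, temperatures_c))
    slope = sxy / sxx
    intercept = t_bar - slope * w_bar
    return slope, intercept

def widths_to_temperatures(ring_widths_mm, slope, intercept):
    """Apply the calibrated scale to rings that have no thermometer record."""
    return [slope * w + intercept for w in ring_widths_mm]

# Made-up overlap period: ring widths (mm) alongside measured temperatures (C).
calib_widths = [1.00, 1.02, 1.04, 1.06, 1.08]
calib_temps = [10.0, 10.1, 10.2, 10.3, 10.4]

slope, intercept = fit_linear_scale(calib_widths, calib_temps)

# Made-up older rings, converted to temperatures with the same scale.
old_widths = [0.98, 1.10]
reconstructed = widths_to_temperatures(old_widths, slope, intercept)
print(slope, intercept, reconstructed)
```

In this toy data the fit is perfect by construction, which is exactly what real tree rings do not give you. The whole method rests on the assumption that the relationship found in the overlap period held in earlier centuries too.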

But a funny thing happened on the way to the Nobel Prize ceremony. It turns out that if you go back to the same trees 10 years later and gather updated samples, the temperatures implied by the new ring widths, using the scaling factors derived previously, do not match well with what we know current temperatures to be.

The initial reaction from Mann and his peers was to try to save their analysis by arguing that there was some other modern anthropogenic effect that was throwing off the scaling for current temperatures (though no one could name what such an effect might be). Upon further reflection, though, scientists are starting to wonder whether tree rings have much predictive power at all. Even Keith Briffa, the man brought into the fourth IPCC to try to save the hockey stick after Mann was discredited, has recently expressed concerns:

> There exists very large potential for over-calibration in multiple regressions and in spatial reconstructions, due to numerous chronology predictors (lag variables or networks of chronologies – even when using PC regression techniques). Frequently, the much vaunted ‘verification’ of tree-ring regression equations is of limited rigour, and tells us virtually nothing about the validity of long-timescale climate estimates or those that represent extrapolations beyond the range of calibrated variability.
>
> Using smoothed data from multiple source regions, it is all too easy to calibrate large scale (NH) temperature trends, perhaps by chance alone.

But this is what really got me the other day. Steve McIntyre (who else) has a post that analyzes each of the tree ring series in the latest Mann hockey stick. Apparently, each series has a calibration period, where the scaling is set, and a verification period, an additional period for which we have measured temperature data to verify the scaling. A couple of points were obvious as he stepped through each series:

- Each series individually has terrible predictive ability. Each could be scaled, but each is so noisy that in many cases standard t-tests can’t even be run, and when they can, the confidence intervals are huge. For example, the series NOAMER PC1 (the series McIntyre showed years ago dominates the hockey stick) predicts that the mean temperature value in the verification period should be between -1C and -16C. For a mean temperature, this is an unbelievably wide range. To give a sense of scale, that is a 27F range, which is roughly equivalent to the difference in average annual temperatures between Phoenix and Minneapolis! A temperature forecast with error bars that could encompass both Phoenix and Minneapolis is not very useful.

- Even with the huge confidence intervals above, that series does not verify! (The verification value is -0.19.) In fact, only one of the numerous data series verifies individually, and even this one was manually fudged to make it work.
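
The arithmetic behind those two points can be checked in a few lines. This is only a sketch: the helper names are hypothetical, and it assumes the quoted verification value of -0.19 is compared directly against the quoted -16C to -1C interval, which is how I read the numbers but is not spelled out in detail above.

```python
def celsius_span_to_fahrenheit_span(span_c):
    """Convert a temperature *difference* from C to F.

    Only the 9/5 factor applies to a difference; the +32 offset cancels out.
    """
    return span_c * 9.0 / 5.0

def falls_inside(value, lower, upper):
    """True if the value lies within the closed interval [lower, upper]."""
    return lower <= value <= upper

# Interval quoted above for the NOAMER PC1 series, in degrees C.
lower_c, upper_c = -16.0, -1.0

# Width of the interval, expressed in degrees F.
span_f = celsius_span_to_fahrenheit_span(upper_c - lower_c)

# Assumption: the verification value is tested directly against that interval.
verification_value = -0.19
inside = falls_inside(verification_value, lower_c, upper_c)

print(span_f)   # 27.0
print(inside)   # False
```

So the interval is 15 degrees wide in Celsius, 27 in Fahrenheit, and the verification value still lands outside it.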

Steve McIntyre is a very careful and fair person, so he allows that even if none of the series individually verify or have much predictive power, they might when combined. I am not a statistician, so I will leave that to him to think about, but I know my response: if all of the series are of low value individually, their value is not going to increase when combined. They may accidentally, en masse, hit some verification value, but we should accept that as an accident, not as some sort of true signal emerging from the data.