I can never make this point too often: when considering the scientific basis for climate action, the issue is not the warming caused directly by CO2. Most scientists, even the catastrophists, agree that this is on the order of 1C per doubling of CO2 (from 280ppm pre-industrial to 560ppm, to be reached sometime late this century). The catastrophe comes entirely from assumptions of positive feedback, which multiplies what would be nuisance-level warming up to catastrophic levels.

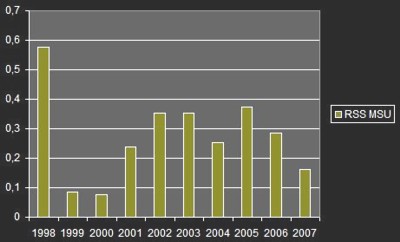
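As a rough guide to how that multiplication works, the textbook feedback-gain relation can be written as below. The symbols $\Delta T_0$ (warming per doubling with no feedback) and $f$ (net feedback fraction) are notation assumed here for illustration; they are not taken from any of the studies discussed.

$$
\Delta T = \frac{\Delta T_0}{1 - f}
$$

Taking $\Delta T_0 \approx 1$C, a feedback fraction of $f = 0$ leaves the warming at 1C, $f = 0.5$ doubles it to 2C, and $f = 2/3$ triples it to 3C. A net negative feedback of $f = -1$ would instead halve it to 0.5C. Under this simple relation, the whole argument turns on the sign and size of $f$.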

My simple analysis shows positive feedbacks appear to be really small or non-existent, at least over the last 120 years. Other studies show higher feedbacks, but Roy Spencer has published a new study showing that these studies are over-estimating feedback.

And the fundamental issue can be demonstrated with this simple example: When we analyze interannual variations in, say, surface temperature and clouds, and we diagnose what we believe to be a positive feedback (say, low cloud coverage decreasing with increasing surface temperature), we are implicitly assuming that the surface temperature change caused the cloud change — and not the other way around.

This issue is critical because, to the extent that non-feedback sources of cloud variability cause surface temperature change, it will always look like a positive feedback using the conventional diagnostic approach. It is even possible to diagnose a positive feedback when, in fact, a negative feedback really exists.
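A minimal sketch of this effect, assuming a one-box ocean mixed layer driven by random forcing, is given below. It is not Spencer's actual model: the function name `diagnose_feedback`, the 50 m depth, the noise amplitudes, the persistence of 0.9, and the "true" feedback parameter of 6 W/m²/K are all illustrative assumptions. The "conventional diagnostic" is taken here to be a least-squares regression of outgoing flux anomaly against temperature anomaly.

```python
import math
import random

SECONDS_PER_DAY = 86400.0
WATER_HEAT_CAPACITY = 4.18e6  # J per m^3 per K, approximate value for water


def diagnose_feedback(true_lambda=6.0, cloud_noise=1.0, other_noise=1.0,
                      depth_m=50.0, persistence=0.9, n_days=200_000, seed=0):
    """Return the feedback parameter (W/m^2/K) that a regression would diagnose.

    All defaults are assumed, illustrative values.
    """
    rng = random.Random(seed)
    step = SECONDS_PER_DAY / (WATER_HEAT_CAPACITY * depth_m)  # K per (W/m^2) per day
    shock = math.sqrt(1.0 - persistence ** 2)  # keeps each forcing's std at its nominal value
    temp = cloud = other = 0.0
    temps, fluxes = [], []
    for _ in range(n_days):
        # Persistent random forcings that are NOT caused by temperature.
        cloud = persistence * cloud + cloud_noise * shock * rng.gauss(0.0, 1.0)
        other = persistence * other + other_noise * shock * rng.gauss(0.0, 1.0)
        # Net outgoing flux anomaly: true feedback response minus the cloud forcing.
        temps.append(temp)
        fluxes.append(true_lambda * temp - cloud)
        # Mixed-layer energy balance, stepped forward one day.
        temp += step * (cloud + other - true_lambda * temp)
    # Conventional diagnosis: least-squares slope of flux against temperature.
    mean_t = sum(temps) / n_days
    mean_f = sum(fluxes) / n_days
    cov = sum((t - mean_t) * (f - mean_f) for t, f in zip(temps, fluxes))
    var = sum((t - mean_t) ** 2 for t in temps)
    return cov / var


if __name__ == "__main__":
    print("no cloud noise:  ", round(diagnose_feedback(cloud_noise=0.0), 2))
    print("with cloud noise:", round(diagnose_feedback(cloud_noise=1.0), 2))
```

With the cloud noise switched off, the regression recovers the specified feedback parameter. With it switched on, the diagnosed value should come out well below the true one. A smaller feedback parameter means a more sensitive climate, so the system looks as if it had more positive feedback than was actually built in.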

I hope you can see from this that the separation of cause from effect in the climate system is absolutely critical. The widespread use of seasonally-averaged or yearly-averaged quantities for climate model validation is NOT sufficient to validate model feedbacks! This is because the time averaging actually destroys most, if not all, evidence (e.g. time lags) of what caused the observed relationship in the first place. Since both feedbacks and non-feedback forcings will typically be intermingled in real climate data, it is not a trivial effort to determine the relative sizes of each.
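The loss of lag information under time averaging can be illustrated with a toy pair of series, sketched below. This uses synthetic data, not climate data: the 5-day delay, the 30-day averaging window, and the helper names `make_series`, `best_lag`, and `block_means` are all assumptions made for the example.

```python
import random


def correlation(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def best_lag(cause, effect, max_lag):
    """Lag (in samples) at which effect[t + lag] correlates best with cause[t]."""
    def corr_at(lag):
        if lag >= 0:
            return correlation(cause[:len(cause) - lag], effect[lag:])
        return correlation(cause[-lag:], effect[:len(effect) + lag])
    return max(range(-max_lag, max_lag + 1), key=corr_at)


def block_means(series, size):
    """Average a series over consecutive, non-overlapping blocks."""
    return [sum(series[i:i + size]) / size
            for i in range(0, len(series) - size + 1, size)]


def make_series(n_days=20_000, delay=5, seed=1):
    """Red-noise 'cause' and an 'effect' that follows it `delay` days later."""
    rng = random.Random(seed)
    cause, level = [], 0.0
    for _ in range(n_days):
        level = 0.8 * level + rng.gauss(0.0, 1.0)
        cause.append(level)
    effect = [(cause[t - delay] if t >= delay else 0.0) + rng.gauss(0.0, 0.5)
              for t in range(n_days)]
    return cause, effect


if __name__ == "__main__":
    cause, effect = make_series()
    print("best lag, daily data (days):", best_lag(cause, effect, 10))
    print("best lag, 30-day means (blocks):",
          best_lag(block_means(cause, 30), block_means(effect, 30), 3))
```

On the daily series the strongest correlation should sit at the imposed 5-day delay, which identifies which series leads. On the 30-day means the peak should move to lag 0, so the averaged data alone no longer show clearly which variable was driving the other.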

While we used the example of random daily low cloud variations over the ocean in our simple model (which were then combined with specified negative or positive cloud feedbacks), the same issue can be raised about any kind of feedback.

Notice that the potential positive bias in model feedbacks can, in some sense, be attributed to a lack of model “complexity” compared to the real climate system. By “complexity” here I mean cloud variability which is not simply the result of a cloud feedback on surface temperature. This lack of complexity in the model then requires the model to have positive feedback built into it (explicitly or implicitly) in order for the model to agree with what looks like positive feedback in the observations.

Also note that the non-feedback cloud variability can even be caused by…(gasp)…the cloud feedback itself!

Let’s say there is a weak negative cloud feedback in nature. But superimposed upon this feedback is noise. For instance, warm SST pulses cause corresponding increases in low cloud coverage, but superimposed upon those cloud pulses is random cloud noise. That cloud noise will then cause some amount of SST variability that then looks like positive cloud feedback, even though the real cloud feedback is negative.

I don’t think I can over-emphasize the potential importance of this issue. It has been largely ignored, although Bill Rossow has been preaching on this same issue for years, phrasing it in terms of the potential nonlinearity of, and interactions between, feedbacks. Similarly, Stephens’ 2005 J. Climate review paper on cloud feedbacks spent quite a bit of time emphasizing the problems with conventional cloud feedback diagnosis.