I began with an 85-page book. I shortened that to a 50-minute film, and then a 9-minute film. With that experience, I think I can now pull out and summarize in just a few paragraphs why we should not fear catastrophic global warming. Here goes:

Climate catastrophists often argue that global warming theory is "settled science." And they are right in one respect: We have a pretty good understanding of how CO2 can act as a greenhouse gas and cause the earth to warm. What is well agreed upon, but is not well communicated in the media, is that a doubling of CO2, without other effects that we will discuss in a moment, will heat the earth about 1 degree Celsius (plus or minus a few tenths). This is not some skeptic’s hallucination — this is straight out of the IPCC third and fourth assessments. CO2, acting alone, warms the Earth only slowly, and at this rate we would see less than a degree of warming over the next century, more of a nuisance than a catastrophe.

But some scientists do come up with catastrophic warming forecasts. They do so by assuming that our Earth’s climate is dominated by positive feedbacks that multiply the initial warming from CO2 by a factor of three, four, five or more. This is a key point: the catastrophe does not come from the science of greenhouse gases, but from separate hypotheses that the earth’s climate is dominated by positive feedback. This is why saying that greenhouse gas theory is "settled" is irrelevant to the argument about catastrophic forecasts: these positive feedbacks are NOT settled science. In fact, the IPCC admits it does not even know the sign of the most important effect (water vapor), much less its magnitude. They assume that the net effect is positive, but they are on very shaky ground doing so, particularly since it is highly improbable for a long-term stable system like climate to be dominated by positive feedback.

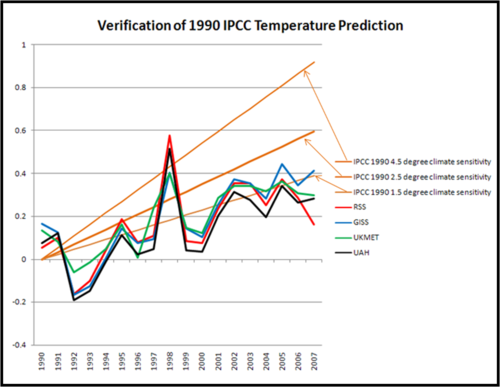

And, in fact, with the 100 or so years of measurements we have for temperature and CO2, empirical evidence does not support these high positive feedbacks. Even if we assign all the 20th century warming to CO2, which is unlikely, our current warming rates imply close to zero feedback. If there are causes other than CO2 for the measured 20th century warming, thereby reducing the warming we blame on CO2, then the last century’s experience implies negative rather than positive feedback in the system. As a result, it should not be surprising that high feedback-driven forecasts from the 1990 IPCC reports have proven to be way too high compared with actual experience (something the IPCC has since admitted).
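The back-of-the-envelope reasoning in this paragraph can be sketched in a few lines. This is only a rough illustration under stated assumptions: that warming scales with the logarithm of the CO2 ratio, that feedback multiplies the no-feedback warming by 1 / (1 - f), and that the no-feedback sensitivity is 1 degree per doubling. The function name, the `co2_share` parameter, and the sample inputs are made up for this sketch and are not measurements.

```python
import math


def implied_feedback_fraction(observed_warming_c, co2_start_ppm, co2_end_ppm,
                              co2_share=1.0, no_feedback_sensitivity_c=1.0):
    """Back out the feedback fraction f implied by a stretch of observed warming.

    Assumes warming scales with log2 of the CO2 ratio and that feedback
    multiplies the no-feedback warming by 1 / (1 - f).
    """
    # Warming CO2 alone would have produced over the period, with no feedback.
    no_feedback_warming = no_feedback_sensitivity_c * math.log2(co2_end_ppm / co2_start_ppm)
    # Portion of the observed warming attributed to CO2 (1.0 = all of it).
    attributed_warming = observed_warming_c * co2_share
    # gain = attributed / no_feedback = 1 / (1 - f)  =>  f = 1 - no_feedback / attributed
    return 1.0 - no_feedback_warming / attributed_warming


# Made-up inputs chosen for easy arithmetic, NOT measurements:
# a full doubling of CO2 accompanied by 1.0 C of observed warming.
print(implied_feedback_fraction(1.0, 280.0, 560.0))                 # all warming blamed on CO2
print(implied_feedback_fraction(1.0, 280.0, 560.0, co2_share=0.5))  # half blamed on CO2
```

With these toy inputs, blaming all of the warming on CO2 gives a feedback of zero, and blaming only half of it on CO2 pushes the implied feedback negative. The sketch ignores time lags and any masking effects.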

However, climate scientists are unwilling to back down from the thin branch they have crawled out on. Rather than reduce their feedback assumptions to non-catastrophic levels, they currently hypothesize a second man-made cooling effect that is masking all this feedback-driven warming. They claim now that man-made sulfate aerosols and black carbon are cooling the earth, and when some day these pollutants are reduced, we will see huge catch-up warming. If anything, this cooling effect is even less understood than feedback. What we do know is that, unlike CO2, the effects of these aerosols are short-lived and therefore localized, making it unlikely they are providing sufficient masking to make catastrophic forecasts viable. I go into several reality checks in my videos, but here is a quick one: Nearly all the man-made cooling aerosols are in the northern hemisphere, meaning that almost all the cooling effect should be there. Yet the northern hemisphere has actually exhibited most of the world’s warming over the past 30 years, while the south has hardly warmed at all.

In sum, to believe catastrophic warming forecasts, one has to believe both of the following:

- The climate is dominated by strong positive feedback, despite our experience with other stable systems that says this is unlikely and despite our measurements over the last 100 years that have seen no such feedback levels.

- Substantial warming, of 1C or more, is being masked by aerosols, despite the fact that aerosols really only have strong presence over 5-10% of the globe and despite the fact that the cooler part of the world has been the one without the aerosols.

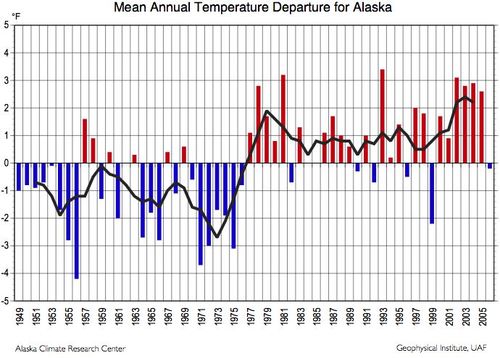

Here’s what this means: Man will cause, at most, about a degree of warming over the next century. Most of this warming will be concentrated in raising minimum temperatures at night rather than maximum daytime temperatures (this is why, despite some measured average warming, the US has not seen an increase of late in maximum temperature records set). There are many reasons to believe that man’s actual effect will be less than 1 degree, and that whatever effect we do have will be lost in the natural cyclical variations the climate experiences, which we are only just now starting to understand.

To keep this relatively short, I have left out all the numbers and such. To see the graphs and numbers and sources, check out my new climate video, or my longer original video, or download my book for free.

Update: Commenters are correct that positive feedback dominated systems can be stable as long as the feedback percentage is less than 100%. By trying to get too compact in my arguments, I combined a couple of things. First, there are many catastrophists who argue that climate IS in fact dominated by feedback over 100%; anyone who talks of "tipping points" is effectively saying this. The argument about instability making stable processes impossible certainly applies to these folks’ logic. Further, even positive feedback below 100% makes a system highly subject to dramatic variations. But Mann et al. are already on the record saying that without man, global temperatures are unbelievably stable and move in extremely narrow ranges. It is hard to imagine this to be true in a climate system dominated by positive feedback, particularly when it is beset all the time with dramatic perturbations, from volcanoes to the Maunder Minimum.
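The commenters' point about the 100% threshold is easy to see with a toy model. The sketch below assumes the simplest possible picture: each round of warming triggers a further round equal to a fixed fraction of the previous one, so the total is a geometric series. The function name and the sample fractions are made up for illustration.

```python
def total_response(initial_warming_c, feedback_fraction, rounds=200):
    """Add up the initial warming plus successive rounds of feedback on it."""
    total = 0.0
    increment = initial_warming_c
    for _ in range(rounds):
        total += increment
        # Each round feeds back a fixed fraction of the previous round.
        increment *= feedback_fraction
    return total


# Illustrative fractions only: below, at, and above 100% feedback.
for f in (0.5, 1.0, 1.1):
    print(f, total_response(1.0, f, rounds=50), total_response(1.0, f, rounds=100))
```

Below 100% the running total settles toward initial / (1 - f) no matter how many rounds are added. At or above 100% it keeps growing with every additional round, which is the runaway case.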

To some extent, climate catastrophists are in a bind. If historic temperatures show a lot of variance, then a strong argument can be made that a large portion of 20th century warming is natural oscillation. If historic temperatures move only in narrow ranges, they have a very difficult time justifying that the climate is dominated by positive feedbacks of 60-80%.

The point to remember, though, is that regardless of likelihood, the historical temperature record simply does not support assumptions of feedback much larger than zero. Yes, time delays and lags make a small difference, but all one has to do is compare current temperatures to CO2 levels 12-15 years ago to account for this lag and one still gets absolutely no empirical support for large positive feedbacks.

Remember this when someone says that greenhouse gas theory is "settled." It may or may not be, but the catastrophe does not come directly from greenhouse gases. Alone, they cause at most nuisance warming. The catastrophe comes from substantial positive feedback (it takes 60-80% levels to get climate sensitivities of 3-5C) which is far from settled science.
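For anyone who wants to check the link between feedback percentages and sensitivities, here is a rough sketch of the arithmetic. It assumes the standard linear feedback gain of 1 / (1 - f) and a no-feedback sensitivity somewhere between 1.0 and 1.2C per doubling; the function name and the 1.2C base value are illustrative assumptions, not figures taken from any report.

```python
def sensitivity_with_feedback(no_feedback_sensitivity_c, feedback_fraction):
    """Warming per CO2 doubling after applying a linear feedback gain of 1 / (1 - f)."""
    if feedback_fraction >= 1.0:
        raise ValueError("feedback of 100% or more has no finite equilibrium")
    return no_feedback_sensitivity_c / (1.0 - feedback_fraction)


# Assumed no-feedback sensitivity ("about 1 degree, plus a few tenths").
BASE_C = 1.2
for f in (0.0, 0.6, 0.8):
    print(f"feedback {f:.0%}: {sensitivity_with_feedback(BASE_C, f):.1f} C per doubling")
```

With a base between 1.0 and 1.2C, feedback of 60-80% works out to roughly 2.5 to 6C per doubling, which brackets the 3-5C range quoted above. With zero feedback the sensitivity is just the base value.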