Isn’t there someone credible in the climate field who can really try to sort out the increasing divergence of satellite vs. surface temperature records? I know there are critical studies to be done on the effect of global warming on acne, but I would think actually having an idea of how much the world is currently warming might be an important fact in the science of global warming.

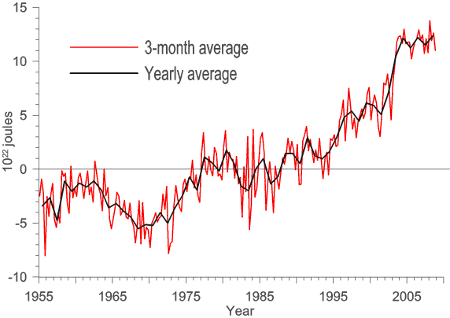

The problem is that surface temperature records are showing a lot more warming than satellite records. The screen capture above is from Global Warming at a Glance on JunkScience.com. The numbers in red are anomalies, and represent deviations from an arbitrary period whose average is set to zero (this period is different for the different metrics). Because the absolute values of the anomalies are not directly comparable, look at the rankings instead:

Here is the conundrum: the two surface records (GISTEMP and Hadley CRUT3) showed May of 2009 as the fifth hottest in over a century of readings. The two satellite records showed it as only the 16th hottest in 31 years of satellite records. It is hard to call something settled science when even a basic question like “was last month hotter or colder than average” can’t be answered with authority.
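To show why rankings are a fair way to compare metrics that use different zero periods, here is a minimal sketch. The function name `rank_hottest` and the anomaly values are made up for illustration and are not real readings from any of the four records. The point is only that adding a constant offset to every anomaly, which is what a different baseline amounts to, leaves the ranking unchanged.

```python
def rank_hottest(anomalies_by_year, year):
    """Return the 1-based rank of `year` (1 = hottest) among the years given."""
    target = anomalies_by_year[year]
    # Count the years that were strictly warmer, then add one.
    return 1 + sum(1 for v in anomalies_by_year.values() if v > target)


# Hypothetical May anomalies in deg C (illustrative values, NOT real data).
may = {2005: 0.40, 2006: 0.10, 2007: 0.25, 2008: -0.05, 2009: 0.15}

# The same series expressed against a different baseline: every value
# moves by the same constant, so the ordering of the years is preserved.
shifted = {year: value + 0.1 for year, value in may.items()}

print(rank_hottest(may, 2009))
print(rank_hottest(shifted, 2009))
```

Both calls print the same rank, which is why a rank within one record can be set beside a rank within another even when the raw anomalies cannot.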

Skeptics have their answer, which has been shown on this site multiple times. Much of the surface temperature record is subject to site location biases, urban warming effects, and huge gaps in coverage. Moreover, instrumentation changes over time have introduced biases, and the GISS and Hadley Centre have both added “correction” factors of dubious quality while refusing to release the detailed methodology or source code behind them.

There are a lot of good reasons to support modern satellite measurement. In fact, satellite measurement has taken over many major climate monitoring functions, such as measurement of Arctic ice extent and solar irradiance. Temperature measurement is the one exception. One is left with a suspicion that the only barrier to acceptance of the satellite records is that alarmists don’t like the answer they are giving.

If satellite records have some fundamental problem that exceeds those documented in the surface temperature record, then it is time to come forward with the analysis or else suck it up and accept them as a superior measurement source.

Postscript: It is possible to compare the absolute values of the anomalies if the averages are adjusted to the same zero for the same period. When I did so, to compare UAH and Hadley CRUT3, I found the Hadley anomaly had to be reduced by about 0.1C to get them on the same basis. This implies Hadley is reading about 0.2C more warming over the last 20-25 years, or about 0.1C/decade.
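The adjustment to a common zero can be sketched roughly as follows. This is not the exact calculation behind the numbers above, just a simple version of the idea: pick a reference period both records cover, subtract each record's own average over that period, and then compare. The function `rebaseline`, the series names, and all values are hypothetical. A more careful version might compute the offset separately for each calendar month.

```python
from statistics import mean


def rebaseline(series, start_year, end_year):
    """Shift a {year: anomaly} series so its mean over the
    reference period [start_year, end_year] is zero."""
    reference = [v for y, v in series.items() if start_year <= y <= end_year]
    if not reference:
        raise ValueError("series has no data in the reference period")
    offset = mean(reference)
    return {y: v - offset for y, v in series.items()}


# Hypothetical annual anomalies in deg C (illustrative values, NOT real data).
series_a = {1979: -0.10, 1980: 0.00, 1981: 0.10, 1982: 0.20}
series_b = {1979: 0.02, 1980: 0.10, 1981: 0.22, 1982: 0.34}

# Put both series on the same zero, using 1979-1981 as the shared reference.
a_adj = rebaseline(series_a, 1979, 1981)
b_adj = rebaseline(series_b, 1979, 1981)

# Once both share a baseline, the year-by-year difference is meaningful.
for year in sorted(a_adj):
    print(year, round(b_adj[year] - a_adj[year], 3))
```

After the shift, any difference that remains in later years reflects the two series diverging, not a mismatch in where each one puts its zero.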