A month or two ago, Kevin Drum (a leftish supporter of strong AGW theory) posted a chart on his site that looked like BS to me. I posted my quick reactions to the chart here, and then, after talking to the data owner in Washington, followed up here.

The gist of my comments was that the trend in the data didn’t make any sense, and upon checking with the data owner, it turns out much of the trend is due to changes in the data collection process. I stand by that conclusion, though not by some of the other suppositions in those posts.

I was excited to see Dr. Mills’ response (thanks to reader Charlie Allen for the heads up). I will quote much of it, but to make sure I can’t be accused of cherry-picking, his whole post is here. I would comment there, but alas, unlike this site, Dr. Mills chooses not to allow comments.

So here we go:

Two blog entries [1-online | PDF] [2-online | PDF] [Accessed June 18, 2009] mischaracterize analysis in a new report entitled Global Climate Change Impacts in the United States. The blogger (a self-admitted “amateur”) created a straw man argument by asserting that the chart was presented as evidence of global climate change and was not verified with the primary source. The blog’s errors have been propagated to other web sites without further fact checking or due diligence. (The use of profanity in the title of the first entry is additionally unprofessional.)

Uh, oh, the dreaded “amateur.” Mea Culpa. I am a trained physicist and engineer. I don’t remember many colleges handing out “climate” degrees in 1984, so I try not to overstate my knowledge. As to using “bullsh*t” in the title, the initial post was “I am calling bullsh*t on this chart.” Sorry, I don’t feel bad about that given the original post was a response to a post on a political blog.

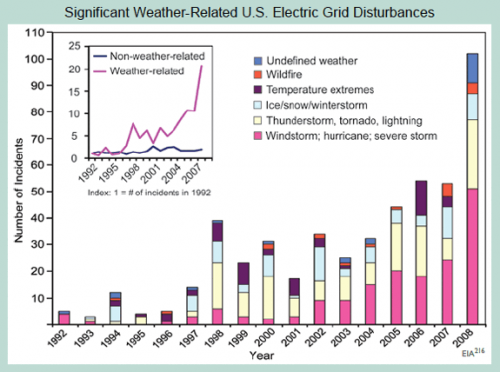

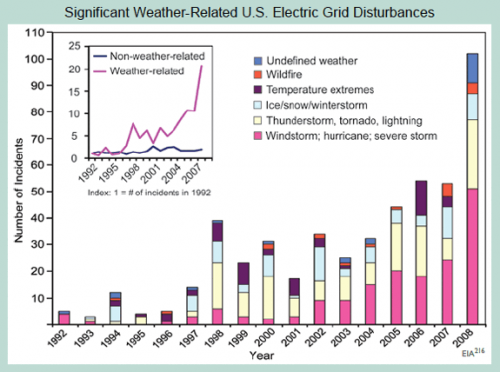

The underlying database—created by the U.S. Department of Energy’s Energy Information Administration—contains approximately 930 grid-disruption events taking place between 1992 and 2008, affecting 135 million electric customers.

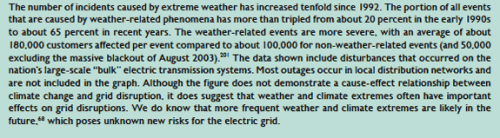

As noted in the caption to the figure on page 58 of our report (shown above)—which was masked in the blogger’s critique—

First, I am happy to admit errors where I make them (I wonder if that is why I am still an “amateur”). It was wrong of me to post the chart without the caption. My only defense was that I copied the chart from, and was responding to its use on, Kevin Drum’s site and he too omitted the caption. I really was not trying to hide what was there. I am on the road and don’t have the original but here it is from Dr. Mills’ post.

Anyway, to continue…

As noted in the caption to the figure on page 58 of our report (shown above)—which was masked in the blogger’s critique—we expressly state a quite different finding than that imputed by the blogger, noting with care that we do not attribute these events to anthropogenic climate change, but do consider the grid vulnerable to extreme weather today and increasingly so as climate change progresses, i.e.:

“Although the figure does not demonstrate a cause-effect relationship between climate change and grid disruption, it does suggest that weather and climate extremes often have important effects on grid disruptions.”

The associated text in the report states the following, citing a major peer-reviewed federal study on the energy sector’s vulnerability to climate change:

“The electricity grid is also vulnerable to climate change effects, from temperature changes to severe weather events.”

To Dr. Mills’ point that I misinterpreted him: if all he wanted to say was that the electrical grid could be disturbed by weather or was vulnerable to climate change, fine. I mean, duh. If there are more tornadoes knocking about, more electrical lines will come down. But if that was Dr. Mills’ ONLY point, then why did he write (emphasis added):

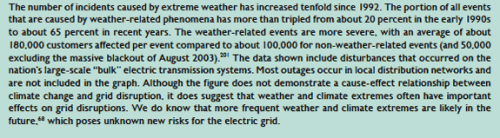

The number of incidents caused by extreme weather has increased tenfold since 1992. The portion of all events that are caused by weather-related phenomena has more than tripled from about 20 percent in the early 1990s to about 65 percent in recent years. The weather-related events are more severe…

He is saying flat out that the grid IS being disturbed 10x more often and more severely by weather. It doesn’t even say “reported” incidents or “may have” — it is quite definitive. So which one of us is trying to create a straw man? It is these statements that I previously claimed the data did not support, and I stand by my analysis on that.

And it’s not like there is some conspiracy of skeptics to misinterpret Dr. Mills. Kevin Drum, a huge cheerleader for catastrophic AGW, said about this chart:

So here’s your chart of the day: a 15-year history of electrical grid problems caused by increasingly extreme weather.

I will skip the next bit, wherein it appears that Dr. Mills is agreeing with my point that aging and increased capacity utilization on the grid could potentially increase weather-related grid outages without any actual change in the weather (just from the grid being more sensitive or vulnerable).

OK, so next is where Dr. Mills weighs in on the key issue of the data set being a poor proxy, given that most of the increase in the chart is due to better reporting rather than changes in the underlying phenomenon:

The potential for sampling bias was in fact identified early-on within the author team and—contrary to the blogger’s accusation—contact was in fact made with the person responsible for the data collection project at the US Energy Information Administration on June 10, 2008 (and with the same individual the blogger claims to have spoken to). At that time the material was discussed for an hour with the EIA official, who affirmed the relative growth was in weather-related events and that it could not be construed as an artifact of data collection changes, etc. That, and other points in this response, were re-affirmed through a follow up discussion in June 2009.

In fact, the analysis understates the scale of weather-related events in at least three ways:

- EIA noted that there are probably a higher proportion of weather events missing from their time series than non-weather ones (due to minimum threshold impacts required for inclusion, and under-reporting in thunderstorm-prone regions of the heartland).

- There was at least one change in EIA’s methodology that would have over-stated the growth in non-weather events, i.e., they added cyber attacks and islanding in 2001, which are both “non-weather-related”.

- Many of the events are described in ways that could be weather-related (e.g. “transmission interruption”) but not enough information is provided. We code such events as non-weather-related.

Dr. Mills does not like me using the “BS” word, so I will just say this is the purest caca. I want a single disinterested scientist to defend what Dr. Mills is saying. Remember:

- Prior to 1998, just about all the data is missing. There were pushes in 2001 and 2008 to try to fix underreporting. Far from denying this, Dr. Mills reports the same facts. So no matter how much dancing he does, much of the trend here is driven by increased reporting, not the underlying phenomenon. Again, the underlying phenomenon may exist, but it certainly is not a 10x increase as reported in the caption.

- The fact that a higher proportion of the missing data is weather-related just underlines the point that the historic weather-related outage data is a nearly meaningless source of trend data for weather-related outages.

- His bullet points are written as if the totals matter, but the point of the chart was never totals. I never said he was overstating weather-related outages today. The numbers in 2008 may still be (and probably are) understated. And I have no idea even if 50 or 80 is high or low, so absolute values have no meaning to me anyway. The chart was designed to portray a trend (remember that first line of the caption: “The number of incidents caused by extreme weather has increased tenfold since 1992.”), not to make a point about absolute values. What matters is therefore not how much is missing, but how much is missing in the early years as compared to the later years.

- In my original post I wrote, as Dr. Mills does, that the EIA data owner thinks there would be a weather trend in the data if you really had quality data. Fine. But it is way, way less of a trend than shown in this chart. And besides, when did the standards of “peer reviewed science” stoop to include estimates of government data analysts as to what the trend in the data would be if the data weren’t corrupted so badly? (Also, the data analyst was only familiar with the data back to 1998; the chart started in 1992.)

- Dr. Mills was aware that the data had huge gaps before publication. Where was the disclosure? I didn’t see any disclosure. I wonder if there was such disclosure in the peer reviewed study that used this data (my understanding is that there must have been one, because the rules of this report are that everything had to come from peer-reviewed sources).

- I don’t think any reasonable person could use this data set in a serious study knowing what the authors knew. But reasonable people can disagree, though I will say that I think there is no ethical way anyone could have talked to the EIA in detail about this data and then used the 1992-1997 data.
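To make the reporting argument concrete, here is a minimal sketch in Python. Every number in it is made up for illustration and none of it comes from the EIA database: it assumes the true number of weather-related events never changes, while the share of events that actually gets reported climbs from 5% to 50% over the period.

```python
# Hypothetical illustration only: none of these numbers come from the EIA data.

def reported_counts(true_counts, reporting_rates):
    """Events that show up in a database, given the share reported each year."""
    return [t * r for t, r in zip(true_counts, reporting_rates)]

years = list(range(1992, 2009))
true_weather_events = [100] * len(years)  # assumed: no real change at all

# Assumed reporting rate climbing linearly from 5% to 50% as collection improves.
start_rate, end_rate = 0.05, 0.50
step = (end_rate - start_rate) / (len(years) - 1)
rates = [start_rate + step * i for i in range(len(years))]

observed = reported_counts(true_weather_events, rates)
apparent_growth = observed[-1] / observed[0]
true_growth = true_weather_events[-1] / true_weather_events[0]

print(f"apparent growth {years[0]}-{years[-1]}: {apparent_growth:.1f}x")
print(f"true growth: {true_growth:.1f}x")
```

Under those assumed rates the reported series grows roughly tenfold while the underlying series is perfectly flat. That does not show the real trend is zero, only that a reported count alone cannot tell the two cases apart.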

Onward:

Thanks to the efforts of EIA, after they took over the responsibility of running the Department of Energy (DOE) data-collection process around 1997, it became more effective. Efforts were made in subsequent years to increase the response rate and upgrade the reporting form.

Thanks, you just proved my point about the trend being driven by changes in reporting and data collection intensity.

To adjust for potential response-rate biases, we have separated weather- and non-weather-related trends into indices and found an upward trend only in the weather-related time series.

As confirmed by EIA, if there were a systematic bias one would expect it to be reflected in both data series (especially since any given reporting site would report both types of events).

As an additional precaution, we focused on trends in the number of events (rather than customers affected) to avoid fortuitous differences caused by the population density where events occur. This, however, has the effect of understating the weather impacts because of EIA definitions (see survey methodology notes below).

Well, it’s possible this is true, though unhappily, this analysis was not published in the original report and was not published in this post. I presume this means he has a non-weather time series that is flat for this period. I’d love to see it, but this is not how the EIA portrayed the data to me. But it really doesn’t matter – I think the fact that there is more data missing in the early years than the later years is indisputable, and this one fact drives a false trend.

But here is what I think is really funny: the above analysis does not matter, because he is assuming a reporting bias symmetry, but just a few paragraphs earlier he stated that there was actually an asymmetry. Let me quote him again:

EIA noted that there are probably a higher proportion of weather events missing from their time series than non-weather ones (due to minimum threshold impacts required for inclusion, and under-reporting in thunderstorm-prone regions of the heartland).
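Here is a rough sketch, in Python, of why that asymmetry undercuts the "both series would show the bias" argument. The reporting rates and event counts are assumptions I made up for illustration, not EIA figures: non-weather events are assumed to be well reported throughout, while weather events start out badly under-reported and improve.

```python
# Hypothetical numbers throughout; this is not the EIA series.

def growth(true_per_year, rate_first_year, rate_last_year):
    """Apparent growth factor in reported events between first and last year."""
    return (true_per_year * rate_last_year) / (true_per_year * rate_first_year)

# Assumed: non-weather events were already well reported, so little changed.
non_weather_growth = growth(true_per_year=50, rate_first_year=0.8, rate_last_year=0.8)

# Assumed: weather events were badly under-reported early and improved later.
weather_growth = growth(true_per_year=100, rate_first_year=0.1, rate_last_year=0.6)

print(f"non-weather apparent growth: {non_weather_growth:.1f}x")
print(f"weather apparent growth: {weather_growth:.1f}x")
```

With these assumed rates the non-weather series comes out flat and the weather series shows a large apparent increase, even though the true number of events is constant in both. A flat non-weather series therefore only rules out reporting bias if the bias hits both series equally.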

Look, Dr. Mills, I don’t have an axe to grind here. This is one chart out of bazillions making a minor point. But the data set you are using is garbage, so why do you stand by it with such tenacity? Can’t anyone just admit “you know, on thinking about it, there are way too many problems with this data set to declare a trend exists. Hopefully the EIA has it cleaned up now and we can watch it going forward.” But I guess only “amateurs” make that kind of statement.

The blogger also speculated that many of the “extreme temperature” events were during cold periods, stating “if this is proof of global warming, why is the damage from cold and ice increasing as fast as other severe weather causes?” The statement is erroneous.

This was pure supposition in my first reaction to the chart. I later admitted that I was wrong. Most of the “temperature” effects are higher temperature. But I will admit it again here – that supposition was incorrect. He has a nice monthly distribution of the data to prove his point.

I am ready to leave this behind, though I will admit that Dr. Mills’ response leaves me more rather than less worried about the quality of the science here. But to summarize, everything is minor compared to this point: The caption says “The number of incidents caused by extreme weather has increased tenfold since 1992.” I don’t think anyone, knowing about the huge underreporting in early years, and better reporting in later years, thinks that statement is correct. Dr. Mills should be willing to admit it was incorrect.

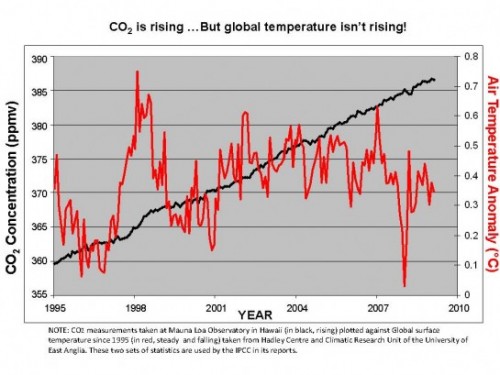

Update: In case I am not explaining the issue well, here is a conceptual drawing of what is going on:

Update #2: One other thing I meant to post. I want to thank Dr. Mills — this is the first time in quite a while I have received a critique of one of my posts without a single ad hominem attack, question about my source of funding, hypothesized links to evil forces, etc. Also I am sorry I wrote “Mr.” rather than “Dr.” Mills. Correction has been made.