A lot of folks have started to analyze the surface temperature record for urban biases. This site has linked a number of past analyses, and I’ve done some first-hand analysis of local surface temperature stations and measurements of the Phoenix urban heat island. My hypothesis is that as much as half of the historic warming signal of 0.7C or so in the surface temperature record is actually growing urban heat islands biasing measurement stations.

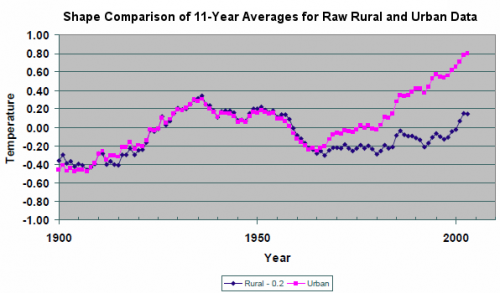

Edward Long took a selection of US measurement points from the NCDC master list and chose 48 rural and 48 urban locations (one of each for each of the lower 48 states). While I would like to see a test to ensure no cherry-picking went on, his results are pretty telling:

Trend in °C/century, 11-year average, based on the use of raw or adjusted data:

| Station Set | Raw Data | Adjusted Data |
|---|---|---|
| Rural (48) | 0.11 | 0.58 |
| Urban (48) | 0.72 | 0.72 |
| Rural + Urban (96) | 0.47 | 0.65 |

More at Anthony Watts, who has this chart from the study:

The Reference Frame has more analysis as well.

If this data is representative of the whole data set, we see two phenomena that should not be news to readers of this site:

- Inclusion of biased urban data points may be contributing as much as 5/6 of the warming signal in the test period

- The homogenization and adjustment process, which is supposed to statistically correct for biases, seems to be correcting the wrong way, raising clean sites to match biased ones rather than vice versa (something I discussed years ago here)
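The arithmetic behind these two points can be checked directly against the table. The sketch below is only a back-of-envelope reading: the trend values come from Long's table, but the helper names (`excess_share`, `adjustment_shift`) and the choice to treat the rural raw trend as the "clean" baseline are my own assumptions, not something taken from the study.

```python
# Trends in degrees C per century, copied from Long's table above.
TRENDS = {
    "rural": {"raw": 0.11, "adjusted": 0.58},
    "urban": {"raw": 0.72, "adjusted": 0.72},
    "combined": {"raw": 0.47, "adjusted": 0.65},
}


def excess_share(biased_trend, clean_trend):
    """Fraction of biased_trend that is in excess of clean_trend.

    Assumption: the clean (rural raw) trend is the true signal and
    everything above it is bias.
    """
    return (biased_trend - clean_trend) / biased_trend


def adjustment_shift(station_set):
    """Change in trend caused by adjustment (adjusted minus raw)."""
    row = TRENDS[station_set]
    return row["adjusted"] - row["raw"]


rural_raw = TRENDS["rural"]["raw"]

# Share of the urban raw trend that exceeds the rural raw trend.
urban_share = excess_share(TRENDS["urban"]["raw"], rural_raw)

# Share of the combined raw trend that exceeds the rural raw trend.
combined_share = excess_share(TRENDS["combined"]["raw"], rural_raw)

print(f"Urban trend in excess of rural: {urban_share:.0%}")
print(f"Combined trend in excess of rural: {combined_share:.0%}")

# Direction and size of the adjustment for each station set.
for name in TRENDS:
    print(f"{name}: adjustment shifts trend by {adjustment_shift(name):+.2f} C/century")
```

On this reading, the urban-versus-rural comparison lands close to the 5/6 figure, and the adjustment moves the rural trend up toward the urban one while leaving the urban trend unchanged.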

The homogenization process has always bothered me. It is probably the best we can do if we don’t know which of two conflicting measurements is likely to be biased, but it makes no sense in this case, as we have a fair amount of confidence the rural location is likely better than the urban.

Let’s say you had two compasses to help you find north, but the compasses are reading incorrectly. After some investigation, you find that one of the compasses is located next to a strong magnet, which you have good reason to believe is strongly biasing that compass’s readings. In response, would you

1. Average the results of the two compasses and use this mean to guide you, or

2. Ignore the output of the poorly sited compass and rely solely on the other unbiased compass?

Most of us would quite rationally choose #2.

Most climate databases go with approach #1.

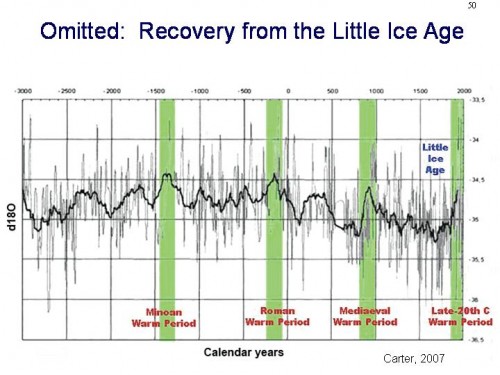

Let’s remind everyone why this matters: We are not going to eliminate past warming. The Earth was at one of its coldest periods in 5000 years through about 1800 and it has gotten warmer since. The reason it matters is twofold:

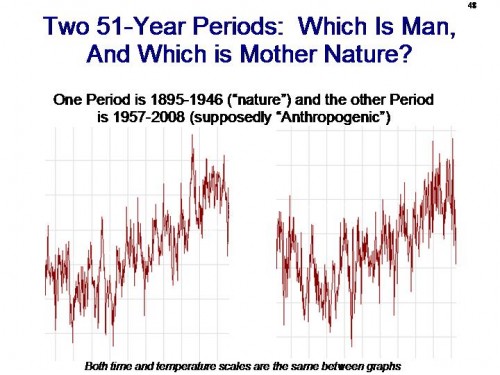

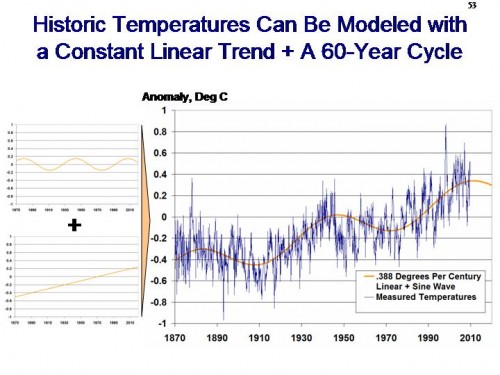

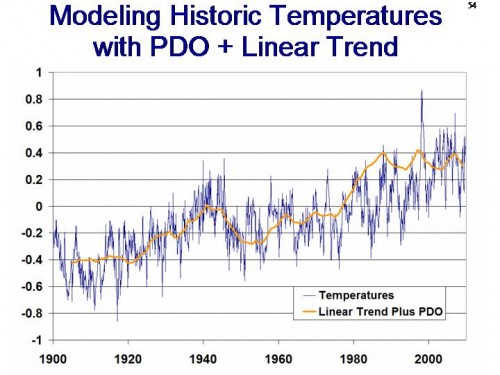

- The main argument for anthropogenic causes of warming is that the rise of late (particularly 1978 – 1998) has been so steep and swift that it couldn’t be anything else. This was always an absurd argument, because we have at least two periods in the last 150 years prior to most of our fossil fuel combustion where temperature rises were as fast and steep as 1978-1998. But if temperatures did not rise as much as we thought, this argument is further gutted.

- High-sensitivity climate models have always had trouble back-casting history. Models that predict 5C of warming with a doubling of CO2 have a difficult time replicating past warming of 0.6C for 40% of a doubling. If the 0.6C is really 0.3C, then someone might actually raise their hand and observe that the emperor has no clothes, i.e., that based on history, high-sensitivity models make no sense.
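The back-casting mismatch in the second point can be put in numbers. This is a rough sketch under loud assumptions: it scales warming linearly with the fraction of a doubling, attributes all past warming to CO2, and ignores any lag in the climate system. The function names are hypothetical and exist only for illustration.

```python
def expected_warming(sensitivity_c, fraction_of_doubling):
    """Warming a model with the given sensitivity would predict so far.

    Assumption: warming scales linearly with the fraction of a doubling.
    """
    return sensitivity_c * fraction_of_doubling


def implied_sensitivity(observed_warming_c, fraction_of_doubling):
    """Sensitivity per doubling implied by the historical warming.

    Same linear-scaling assumption, run in reverse.
    """
    return observed_warming_c / fraction_of_doubling


FRACTION_OF_DOUBLING = 0.40  # "40% of a doubling", from the text
MODEL_SENSITIVITY_C = 5.0    # high-sensitivity model, from the text

predicted = expected_warming(MODEL_SENSITIVITY_C, FRACTION_OF_DOUBLING)
print(f"A 5C model predicts {predicted:.1f}C of warming to date")

# Compare the reported 0.6C with the 0.3C that remains if half is urban bias.
for observed in (0.6, 0.3):
    implied = implied_sensitivity(observed, FRACTION_OF_DOUBLING)
    print(f"Observed {observed:.1f}C implies about {implied:.2f}C per doubling")
```

Under these simplifications, a 5C model should already have produced several times the observed warming, and halving the observed figure halves the implied sensitivity again.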