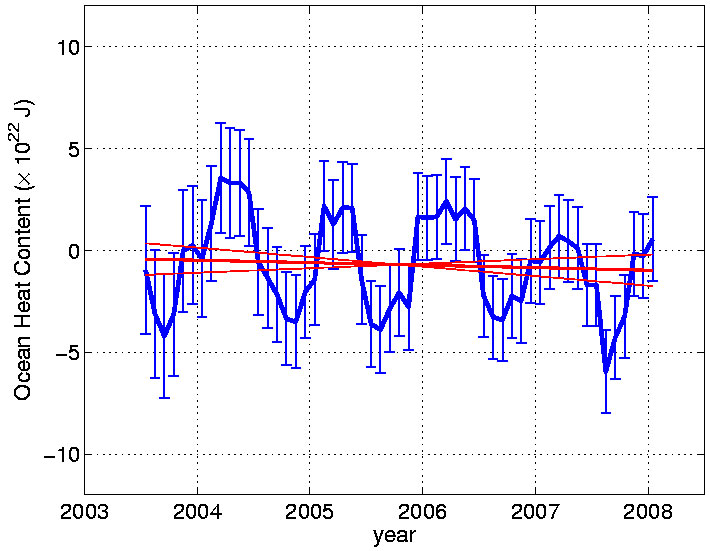

From Josh Willis, of the JPL, at Roger Pielke’s Blog:

we assume that all of the radiative imbalance at the top of the atmosphere goes toward warming the ocean (this is not exactly true of course, but we think it is correct to first order at these time scales).

This is a follow-up to Pielke’s discussion of ocean heat content as a better way to test for greenhouse warming, where he posited:

Heat, unlike temperature at a single level as used to construct a global average surface temperature trend, is a variable in physics that can be assessed at any time period (i.e. a snapshot) to diagnose the climate system heat content. Temperature not only has a time lag, but a single level represents an insignificant amount of mass within the climate system.

It is greenhouse gas effects that might create a radiative imbalance at the top of the atmosphere. Anyway, here are Willis’s results for ocean heat content.

Where’s the warming?

C’mon. You’re not being serious. A four-year time span? And you expect to extract a 0.2ºC average per decade out of that? I say congrats for having an independent head and all, but please let’s not dwell on stupid.

I don’t think it’s stupid. If the CAGW hypothesis is correct, then as the level of CO2 increases, the total heat in the system should increase steadily and significantly.
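To give a sense of scale for "the total heat in the system should increase steadily," here is a minimal sketch that converts a top-of-atmosphere imbalance into joules gained per year. It follows the first-order assumption quoted above that all of the imbalance ends up in the ocean. The imbalance values, the surface area figure, and the function name `annual_heat_gain_joules` are illustrative assumptions, not numbers from Willis's data.

```python
# Rough conversion from a global-mean radiative imbalance to heat gained per year.
# All inputs are illustrative assumptions, not values taken from the post.

EARTH_SURFACE_AREA_M2 = 5.1e14           # approximate surface area of the Earth
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # about 3.16e7 seconds


def annual_heat_gain_joules(imbalance_w_per_m2, ocean_fraction=1.0):
    """Heat accumulated in one year for a given imbalance in W/m^2.

    ocean_fraction=1.0 mirrors the first-order assumption that all of the
    imbalance goes toward warming the ocean.
    """
    return (imbalance_w_per_m2 * EARTH_SURFACE_AREA_M2
            * SECONDS_PER_YEAR * ocean_fraction)


if __name__ == "__main__":
    for imbalance in (0.25, 0.5, 1.0):   # hypothetical imbalances in W/m^2
        gain = annual_heat_gain_joules(imbalance)
        print(f"{imbalance:4.2f} W/m^2 -> {gain:.2e} J per year")
```

Under these assumptions, an imbalance of 1 W/m^2 corresponds to roughly 1.6e22 joules per year, so whether a four-year record can show it depends on how that compares with the error bars on the chart.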

clearly a four year trend does not demonstrate much in terms of broader climate.

this is an interesting idea though and i look forward to seeing how the data plays out over the coming years. it’s a direct, objective measure that is not susceptible to human influence (urban heat island) nor the vagaries of max/min averaging or wild near term extremes like a super el nino.

of note, it does seem to show one interesting thing:

we are not in the midst of a “tipping point” or some other radical acceleration of warming trends.

this would seem to imply that we have some time to assess climate issues and need not be hurried by those predicting imminent catastrophe.

I think Dr. Pielke’s point is that OHC is one of the variables which we can evaluate on shorter timescales because the ‘weather noise’ is much lower. This means 4 years is long enough to draw some conclusions.
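The claim that lower noise allows conclusions from a shorter record can be illustrated with the standard error of a least-squares trend. This is a sketch under simplifying assumptions: evenly spaced samples, independent noise of constant size, and made-up noise levels in arbitrary units. The function name `slope_standard_error` is hypothetical, and real records only approximate these conditions.

```python
import math


def slope_standard_error(noise_sigma, years, samples_per_year):
    """Standard error of an ordinary least-squares trend, in units per year.

    Assumes evenly spaced samples with independent noise of equal size.
    """
    n = int(years * samples_per_year)
    times = [i / samples_per_year for i in range(n)]
    mean_time = sum(times) / n
    spread = sum((t - mean_time) ** 2 for t in times)
    return noise_sigma / math.sqrt(spread)


if __name__ == "__main__":
    # Hypothetical noise levels; only their ratio matters for the comparison.
    for label, sigma in (("noisy series", 1.0), ("quiet series", 0.2)):
        for years in (4, 10, 30):
            se = slope_standard_error(sigma, years, samples_per_year=4)
            print(f"{label}, {years:2d} years: trend uncertainty ~ {se:.4f} per year")
```

In this simplified picture the trend uncertainty scales directly with the noise level and falls off roughly as the record length to the power of -1.5, so a much quieter variable can reach a given uncertainty in far fewer years. Whether ocean heat content is quiet enough for four years to suffice is exactly what the commenters are disputing.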

You can look at it another way. Warmers claim that CO2 is causing energy to accumulate in the earth system. The temperatures are declining so this heat is not in the air. The only other options are space or the oceans. If it is not in the oceans then the extra heat must be going into space. If it is going into space then there is nothing to worry about (this is what Roy Spencer says is happening with cloud feedbacks).

Wait, is that a chart with error bars? How refreshing.

Sea temperature readings to these depths, of this quality, are unprecedented. We really only have relatively few years of good quality climate data. All the models until now have been based on crap.

Perhaps the commenters above are confusing ocean heat content with atmospheric temperature readings. Water has a much higher heat capacity than air, and is much more resistant to change. Four years of reliable data on ocean heat is significant in terms of global heat content trends. And what you see is no trend except perhaps slight cooling. That is very significant.
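For a rough sense of how much more heat the ocean can store than the air, the sketch below compares total heat capacities. The masses and specific heats are approximate textbook values supplied here as assumptions; none of them come from the post or from Willis's data.

```python
# Back-of-the-envelope comparison of total heat capacity: ocean vs atmosphere.
# All four constants are approximate textbook values (assumptions).

OCEAN_MASS_KG = 1.4e21
SEAWATER_SPECIFIC_HEAT = 3990.0    # J/(kg K)
ATMOSPHERE_MASS_KG = 5.1e18
AIR_SPECIFIC_HEAT = 1005.0         # J/(kg K), at constant pressure


def total_heat_capacity(mass_kg, specific_heat):
    """Energy in joules needed to warm the whole reservoir by one kelvin."""
    return mass_kg * specific_heat


if __name__ == "__main__":
    ocean = total_heat_capacity(OCEAN_MASS_KG, SEAWATER_SPECIFIC_HEAT)
    air = total_heat_capacity(ATMOSPHERE_MASS_KG, AIR_SPECIFIC_HEAT)
    print(f"ocean:      {ocean:.2e} J/K")
    print(f"atmosphere: {air:.2e} J/K")
    print(f"ratio:      {ocean / air:.0f}")
```

With these figures the full ocean holds on the order of a thousand times more heat per degree than the atmosphere, which is the sense in which a single atmospheric level represents little of the system's mass. Only the upper ocean is sampled in practice, so the effective ratio for any given dataset would be smaller.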

Perhaps the latter commenter is trying to make a confusing case linking long term readings with uncertainties while claiming clear certainties and trends in a recent four year span with incredible noise in it. Perhaps he is confusing his own personal common sense with authoritative scientific voice. Perhaps he is just another fool hanging around in the internets.

Anybody else, here, notice what looks like a one year time lag?

Wait, is that a chart with error bars? How refreshing.

Posted by: joshv

I thought it was open-hi-low-close.

How about a temp chart with Bollinger bands?

(4 years may not a trend make, but neither does bogus proxy measurement over 650,000* years…..)

*Please, enough with the proxy crap. It’s (mostly) crap.

Luis Dias – Actually, while his conclusion wasn’t quite right (A four year trend is just that – a four year trend, and, like a forty year trend, not necessarily to be taken seriously), his general point is pretty good; that this data looks to be of significantly higher quality than most of what we see.

To say anything meaningful here, somebody would have to take a look at his methodologies, which are much more important than having error bands when it comes to getting a right answer. (Although they’re both quite important; if you don’t have error bands, a 99% match still isn’t good enough, because you’ve claimed to be precisely correct.) Where the measurements were taken, what time of day, with what equipment, at what depths – what “corrections” took place once he was done.

Current AGW outcomes

– About 4% more sea ice now than the mean. (National Snow and Ice Data Center sea ice viewer)

– Lower temperatures since 1998 per the British Met Office Hadley Centre

– Lower ocean temps since 2003 per this discussion (Argo)

– A stable trend of N Hemisphere snow cover looking at Dec to Feb data since 1966. The most snow cover in the N Hemisphere in Jan 2008 since 1966. Rutgers Center.

Doesn’t this add up to less heat in the ocean and less heat in the atmosphere?

As a layman, after looking at the data for three weeks I am fairly appalled that the White House Climate Report and Union of Concerned Scientists are declaring crisis and declaring it absolutely. This absolute ‘consensus’ looks more like corrupted absolute power.

I appreciate the challenging looks here.

Are we seeing the unraveling of the scientific story of the century?

Adirian, and who the fuck says that this “data” is of “higher quality than most of what we see”? A curious lone blogger? A lost avatar commenter on a skeptic’s blog? Why should I take seriously anyone that just arrives on the scene claiming that “I have the best data in the world, and everyone else is just plain wrong, because I just know and you should trust me ’cause I’m also a skeptic, blink blink!”

I’ve seen too many “scientists” making such bogus claims, perpetual motion machines and plain wrong science not to recognize a rat when I see one.

But perhaps you just like the kool-aid. Drink it at your intellectual peril. I once read a study that says that drinking kool-aid produces brain cancer. It must be right, it’s SCIENCE, haven’t you heard?

I don’t see why we have to argue about climate warming (or cooling.) We know the exact total irradiance (I recall something like 1176 watts/sq. meter) coming in and I would think we can measure the total energy leaving the earth in all frequencies, day and night. I think one of these is, if I recall correctly, the Bond albedo, but we would have to measure the night emission also. If the amount of energy coming in is greater than the total amount leaving, then, it seems to me, the earth is warming. And vice versa.

Of course there are a few minuscule effects: the energy of cosmic rays and meteorites passing through the atmosphere, heat coming up from the earth’s core via lava or plain ground heat, evaporative cooling as from loss of hydrogen and helium–the lighter atoms–from the top of the atmosphere, but wouldn’t these be almost zero?

Now, maybe people don’t talk about this direct approach because it is too difficult, I don’t know. It seems that it would take at least four satellites arranged in a tetrahedron to fully look down upon the earth and catch both day and night sides. Maybe the instrumentation is too difficult and they don’t really know how to measure all frequencies. And, of course, it could not be a one-time measurement, as the sun is irregular and varies about 0.1% as I recall. And, probably, the weather and clouds would likewise cause variance in the total emissivity. So the answers would be time series integrals.

But, wouldn’t it theoretically put an end to all the speculation?
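The bookkeeping this comment proposes can be written down in a few lines. The sketch below is only an illustration: the function name `net_imbalance` is hypothetical, and the input values (a solar irradiance of about 1361 W/m^2, a Bond albedo of 0.30, and an outgoing longwave flux of 238 W/m^2) are assumed round figures rather than the number recalled in the comment or any measured result.

```python
def net_imbalance(solar_irradiance, bond_albedo, outgoing_longwave):
    """Global-mean energy imbalance in W/m^2 (positive means heat is accumulating).

    The incoming beam is intercepted by a disc but spread over a sphere,
    hence the division by 4. The reflected fraction (albedo) is removed
    before comparing with the thermal emission leaving the planet.
    """
    absorbed = solar_irradiance / 4.0 * (1.0 - bond_albedo)
    return absorbed - outgoing_longwave


if __name__ == "__main__":
    # Assumed, illustrative inputs in W/m^2 (albedo is dimensionless).
    imbalance = net_imbalance(solar_irradiance=1361.0,
                              bond_albedo=0.30,
                              outgoing_longwave=238.0)
    print(f"net imbalance: {imbalance:+.3f} W/m^2")
```

Note how the answer is a small difference between two large numbers of a couple of hundred W/m^2 each, so a modest error in the irradiance, the albedo, or the outgoing flux can swamp the result.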

dnaxy,

The process is a little more messy than the AGW propagandists have claimed.

First, we don’t know the exact total irradiance. It varies quite markedly over the year because the Earth’s orbit is elliptical, and over the years and decades because the sun is also bobbing about in its dance with the heavier planets. The several different satellites come up with slightly different answers – they’re all within a few Watts of one another, but it’s a tricky measurement to calibrate. Because the radiation emitted and reflected from the Earth is not uniform in all directions. Because when you sum the time series, the errors add too. (If you’re lucky you just get a random walk – if not, a systematic error will accumulate.) And because the actual difference between input and output would be tiny even if AGW claims are correct. For any body in equilibrium the input and output balance, so it is only the rate of change you might detect. This is a number measured in fractions of a degree per decade.

It’s a measurement far beyond current capabilities. But yes, in theory it would settle the argument.
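The point about errors adding when a time series is summed can be made concrete with a small sketch. It contrasts the two cases mentioned in the reply: independent random errors, which grow like a random walk, and a constant calibration bias, which grows in proportion to the number of steps. The function names and the per-step error sizes are hypothetical and chosen only for illustration.

```python
import math


def accumulated_random_error(sigma_per_step, n_steps):
    """Standard deviation of a sum of n independent errors (random-walk growth)."""
    return sigma_per_step * math.sqrt(n_steps)


def accumulated_systematic_error(bias_per_step, n_steps):
    """Total error from a constant bias repeated at every step (linear growth)."""
    return bias_per_step * n_steps


if __name__ == "__main__":
    # Hypothetical per-measurement errors in the same arbitrary units.
    sigma, bias = 1.0, 0.1
    for n in (10, 100, 1000, 10000):
        rand = accumulated_random_error(sigma, n)
        syst = accumulated_systematic_error(bias, n)
        print(f"n={n:5d}  random ~ {rand:8.1f}   systematic = {syst:8.1f}")
```

Even a bias ten times smaller than the random scatter overtakes it once enough measurements are summed, which is why calibration matters more than precision for an integrated energy budget.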